Just post all the code instead of the compiler output… If it's not too much, I'll have a look.


Direct3D 11 Textures not working

Here's the HLSL:

```hlsl
#coffee vs_layout(VSInput)
struct VSInput
{
    float3 Position : POSITION;
    float2 TexCoord : TEXCOORD;
};

struct PSInput
{
    float4 Position : SV_POSITION;
    float2 TexCoord : TEXCOORD;
};

PSInput VSMain(VSInput input)
{
    PSInput output;
    output.Position = float4(input.Position, 1.0);
    output.TexCoord = input.TexCoord;
    return output;
}

Texture2D MainTex;
SamplerState SampleType;

float4 PSMain(PSInput input) : SV_TARGET
{
    return MainTex.Sample(SampleType, input.TexCoord);
}
```

And here's the input layout creation:

std::string inputLayoutBlock = FileUtils::GetBlock(FileUtils::FindToken(src.c_str(), m_PreProcessorDirectives["vs_layout"]), nullptr);

std::vector<std::string> inputLayoutTokens = FileUtils::Tokenize(inputLayoutBlock);

unsigned int index = 2;

std::vector<D3D11_INPUT_ELEMENT_DESC> inputElements;

while (index < inputLayoutTokens.size())

{

if (inputLayoutTokens[index] == "}")

break;

std::string type = inputLayoutTokens[index];

index += 3;

std::string name = inputLayoutTokens[index++];

name.erase(std::remove(name.begin(), name.end(), ';'), name.end());

char* semanticName = new char[name.length() + 1];

strcpy(semanticName, name.c_str());

D3D11_INPUT_ELEMENT_DESC inputElement;

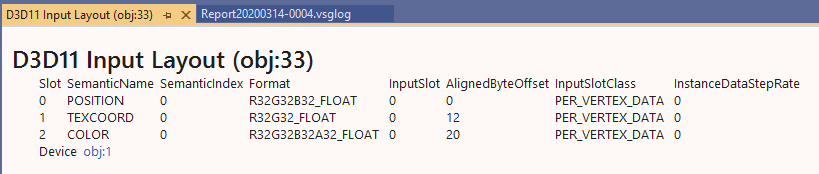

inputElement.SemanticName = semanticName;

inputElement.SemanticIndex = 0;

inputElement.Format = ShaderDataTypeToDXGIFormat(StringToShaderDataType(type));

inputElement.InputSlot = 0;

inputElement.AlignedByteOffset = D3D11_APPEND_ALIGNED_ELEMENT;

inputElement.InputSlotClass = D3D11_INPUT_PER_VERTEX_DATA;

inputElement.InstanceDataStepRate = 0;

inputElements.push_back(inputElement);

}

HRESULT result = DX11Context::GetDevice().CreateInputLayout(inputElements.data(), inputElements.size(), vertexByteCode->GetBufferPointer(), vertexByteCode->GetBufferSize(), &m_VertexLayout);

COFFEE_LOG_INFO(result);I'm just reading the different parts of the actual float3 and float2 declarations and determining the D3D11_INPUT_ELEMENT_DESC values based on those. And yes, I've checked that I'm using the correct Format for each variable
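For comparison, here is a minimal, portable sketch of the same idea, assuming the layout has already been split into (HLSL type, semantic) pairs. Each type maps to a format and a byte size, and because 32-bit float elements declared with D3D11_APPEND_ALIGNED_ELEMENT sit back to back, the running offset after the last element is the vertex stride. `ElementFormat`, `ParsedElement`, `FormatFor`, and `BuildLayout` are hypothetical names, not the poster's `ShaderDataTypeToDXGIFormat`; `ElementFormat` merely stands in for the real `DXGI_FORMAT` values so the sketch builds without the Windows SDK.

```cpp
#include <cassert>
#include <cstdint>
#include <stdexcept>
#include <string>
#include <utility>
#include <vector>

// Hypothetical stand-in for DXGI_FORMAT so the sketch builds without the Windows SDK.
enum class ElementFormat { R32_FLOAT, R32G32_FLOAT, R32G32B32_FLOAT, R32G32B32A32_FLOAT };

struct ParsedElement {
    std::string semantic;  // e.g. "POSITION"
    ElementFormat format;  // would be a DXGI_FORMAT in real code
    std::uint32_t offset;  // byte offset of this element inside one vertex
};

// Maps an HLSL float type to its format and writes its size in bytes to `size`.
ElementFormat FormatFor(const std::string& type, std::uint32_t& size) {
    if (type == "float")  { size = 4;  return ElementFormat::R32_FLOAT; }
    if (type == "float2") { size = 8;  return ElementFormat::R32G32_FLOAT; }
    if (type == "float3") { size = 12; return ElementFormat::R32G32B32_FLOAT; }
    if (type == "float4") { size = 16; return ElementFormat::R32G32B32A32_FLOAT; }
    throw std::invalid_argument("unsupported vertex type: " + type);
}

// Fills `out` from (type, semantic) pairs and returns the vertex stride in bytes.
// 32-bit float elements are placed back to back, matching what
// D3D11_APPEND_ALIGNED_ELEMENT produces for them.
std::uint32_t BuildLayout(const std::vector<std::pair<std::string, std::string>>& members,
                          std::vector<ParsedElement>& out) {
    assert(!members.empty() && "a vertex layout needs at least one element");
    std::uint32_t offset = 0;
    for (const auto& member : members) {
        std::uint32_t size = 0;
        ElementFormat format = FormatFor(member.first, size);
        out.push_back({member.second, format, offset});
        offset += size;
    }
    return offset;  // bytes per vertex: the stride the vertex buffer must be bound with
}
```

For the `VSInput` struct in the shader, `BuildLayout({{"float3", "POSITION"}, {"float2", "TEXCOORD"}}, elements)` returns 20, with TEXCOORD at byte offset 12. Returning the stride from the same pass that builds the layout means the two can't drift apart.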

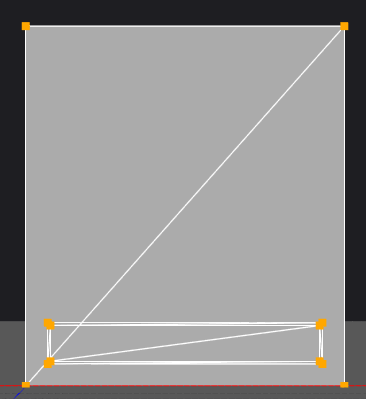

Alright, so I just checked the Pipeline Stages for the draw call in the latest graphics debugging capture, and for some reason the Pixel Shader stage doesn't run. I'll have to look into it a bit more.

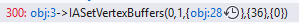

peter1745 said:

Hang on… I think I messed up and hardcoded the vertex buffer's stride…

The Direct3D debug layer (a device created with D3D11_CREATE_DEVICE_DEBUG) warns you about this, by the way, when the stride is smaller than the input layout expects. It's not an error, though, and you can actually use this behavior if you want to.
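One way to avoid a hardcoded stride is to take it from the CPU-side vertex type itself. This is a minimal sketch assuming a hypothetical `Vertex` struct that mirrors `VSInput`; the `static_assert`s stop compilation if the struct ever stops matching the offsets D3D11_APPEND_ALIGNED_ELEMENT gives the two elements. In the renderer, the resulting value would be the stride passed to `ID3D11DeviceContext::IASetVertexBuffers`.

```cpp
#include <cstddef>
#include <cstdint>

// Hypothetical CPU-side vertex mirroring VSInput (float3 POSITION, float2 TEXCOORD).
struct Vertex {
    float Position[3];  // POSITION, byte offset 0
    float TexCoord[2];  // TEXCOORD, byte offset 12
};

// Compile-time checks that the struct matches the input layout's packed offsets.
static_assert(offsetof(Vertex, Position) == 0, "POSITION must start the vertex");
static_assert(offsetof(Vertex, TexCoord) == 12, "TEXCOORD must follow the float3");
static_assert(sizeof(Vertex) == 20, "no padding expected between or after the floats");

// Stride to bind the vertex buffer with, derived from the type instead of typed by hand.
constexpr std::uint32_t kVertexStride = static_cast<std::uint32_t>(sizeof(Vertex));

// Byte size of a vertex buffer holding `count` vertices.
constexpr std::uint32_t VertexBufferBytes(std::uint32_t count) {
    return count * kVertexStride;
}
```

If the vertex format later gains a normal or color, the stride updates automatically, and the assertions point at exactly which offset no longer matches the shader.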

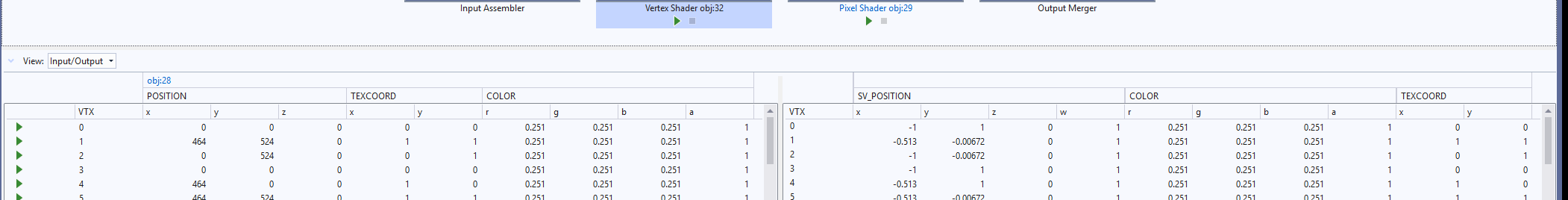

If you select the “Vertex Shader” or “Input Assembler” stage, you should be able to see whether this is the case from how the debugger interpreted the data.

Generally, if the input or output of the input assembler or vertex shader doesn't display properly in Visual Studio, something is set up wrong, because the graphics debugger does a reasonable job of interpreting the common layout elements.

You can also inspect pretty much any object, such as the input layout, and any call in the frame.

@Beosar I'm going to have to look into debug info and general Direct3D debugging a bit more, then.

@syncviews Yeah, I saw that. It's incredibly useful, and it's actually how I found the stride issue: parts of the vertex positions were ending up in the texture coordinate variable in the shader.
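That symptom follows from how the input assembler addresses vertices: vertex `i` starts at `i * stride` bytes, and each element is read at its own offset from there, whatever the stride is. The small CPU-side simulation below shows the misread, assuming the 20-byte float3 + float2 layout from the shader and a stride of 12 bytes standing in for the hardcoded value (the thread doesn't say which value was actually used). `FetchVertex` and `FetchedVertex` are illustrative helpers, not Direct3D APIs.

```cpp
#include <cassert>
#include <cstddef>
#include <cstring>
#include <vector>

constexpr std::size_t kTexCoordOffset = 12;  // TEXCOORD follows the float3 POSITION
constexpr std::size_t kElementBytes = 20;    // bytes read per vertex (12 + 8)

struct FetchedVertex {
    float Position[3];
    float TexCoord[2];
};

// Mimics the input assembler: vertex i begins at i * stride bytes, POSITION is read
// at offset 0 and TEXCOORD at offset 12, regardless of what the stride is.
FetchedVertex FetchVertex(const std::vector<float>& buffer, std::size_t i, std::size_t stride) {
    const auto* bytes = reinterpret_cast<const unsigned char*>(buffer.data());
    const std::size_t base = i * stride;
    assert(base + kElementBytes <= buffer.size() * sizeof(float) && "read past the end of the buffer");
    FetchedVertex v{};
    std::memcpy(v.Position, bytes + base, sizeof(v.Position));
    std::memcpy(v.TexCoord, bytes + base + kTexCoordOffset, sizeof(v.TexCoord));
    return v;
}
```

For a buffer holding {0, 1, 2, 0.25, 0.5} and {10, 11, 12, 0.75, 1} back to back, fetching vertex 1 with the correct 20-byte stride gives position (10, 11, 12) and texcoord (0.75, 1). With a 12-byte stride, the same fetch gives position (0.25, 0.5, 10) and texcoord (11, 12): two components of the second vertex's real position end up in TEXCOORD, just as the graphics debugger showed.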