I am currently developing a plugin for UE similar to Substance Painter. I am framing this post in more general, DirectX-oriented terms, since that seems more appropriate.

As part of the development I am writing a shader that extracts all the PBR attributes from UE materials and stores them in multiple render targets at once. I chose a layout similar to the one UE itself uses. Here is a code snippet so you can see what I mean:

    float Metallic = GetMaterialMetallic(PixelMaterialInputs);
    float Specular = GetMaterialSpecular(PixelMaterialInputs);
    float Roughness = GetMaterialRoughness(PixelMaterialInputs);
    float Anisotropy = GetMaterialAnisotropy(PixelMaterialInputs);
    float3 Emissive = GetMaterialEmissive(PixelMaterialInputs);

    float Opacity = GetMaterialOpacity(PixelMaterialInputs);
    #if MATERIALBLENDING_MASKED || (DECAL_BLEND_MODE == DECALBLENDMODEID_VOLUMETRIC)
    // In these modes, use the opacity mask instead of the opacity input.
    Opacity = GetMaterialMask(PixelMaterialInputs);
    #endif

    float3 Normal = GetMaterialNormal(MaterialParameters, PixelMaterialInputs);
    float3 WorldTangent = GetMaterialTangent(PixelMaterialInputs);
    float4 Subsurface = GetMaterialSubsurfaceData(PixelMaterialInputs);

    // Height is stored as the magnitude of the saved world position offset.
    float4 Offsets = Input.SavedWorldPositionOffsets;
    float Height = length(Offsets.rgb);

    uint ShadingModelId = GetMaterialShadingModel(PixelMaterialInputs);

    //~ Support all types of materials, for now we only support default lit mode
    if (ShadingModelId == SHADINGMODELID_DEFAULT_LIT)
    {
        // BaseColor is fetched earlier in the shader (not shown in this snippet).
        OutputA = float4(BaseColor.rgb, Opacity);
        OutputB = float4(Metallic, Roughness, Specular, 1.0);
        OutputC = float4(Normal.rgb, Height);
        OutputD = float4(WorldTangent.rgb, Anisotropy);
        OutputE = float4(Emissive.rgb, 1.0);
        //OutputF = float4(0.0, 0.0, 0.0, 0.0); // unused
    }

As you can see, I am storing several channels across different render targets, named A to F. Up to this point everything works. Then I realized that layer blending needs alpha information for each channel, and DirectX MRT only supports 8 render targets per draw call. My idea for the layer blending is very simple: my textures can be bound as unordered access views (UAVs), so I plan to dispatch a compute shader for each layer until they are all blended. Maybe I could also keep a cache of the result rendered up to the last active layer and continue blending from there every time I paint.
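To make the per-layer compute blend described above concrete, here is a minimal sketch of one blend step. All resource names, register slots, and the `BlendParams` constant buffer are hypothetical, and it assumes a single coverage value per texel for the whole target; in practice the `.rgb` and `.a` parts of a target may need separate coverage, since they hold different attributes. It reads the layers below through a shader resource view and writes to a separate UAV (ping-pong), so it does not rely on typed UAV loads of four-channel formats, which are an optional hardware feature.

```hlsl
// Hypothetical bindings: adapt names and registers to your own setup.
Texture2D<float4>   BelowLayers   : register(t0); // blended result of all layers below
Texture2D<float4>   LayerValue    : register(t1); // one render target of the layer on top
Texture2D<float>    LayerCoverage : register(t2); // that layer's alpha (coverage)
RWTexture2D<float4> BlendedOut    : register(u0); // output; becomes BelowLayers next step

cbuffer BlendParams : register(b0)
{
    uint2 TextureSize;  // target size in texels
    float LayerOpacity; // global opacity of the layer, 0..1
};

[numthreads(8, 8, 1)]
void BlendLayerCS(uint3 DispatchId : SV_DispatchThreadID)
{
    // Skip threads that fall outside the texture when the size
    // is not a multiple of the thread group size.
    if (any(DispatchId.xy >= TextureSize))
    {
        return;
    }

    uint2  Texel = DispatchId.xy;
    float4 Below = BelowLayers[Texel];
    float4 Above = LayerValue[Texel];
    float  Alpha = saturate(LayerCoverage[Texel] * LayerOpacity);

    // Plain "normal" blend: interpolate from the layers below toward this layer.
    BlendedOut[Texel] = lerp(Below, Above, Alpha);
}
```

With 8x8 thread groups, each dispatch would use `ceil(width / 8)` by `ceil(height / 8)` groups. The cache idea then amounts to keeping the `BlendedOut` texture produced just below the active layer and restarting the chain from it after each paint stroke.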

But what is really killing me is the alpha storage.

So I have three ideas. The first is simply to attach more textures and store the alphas there: I could attach 2 more textures for 8 extra channels (2 x RGBA, 8 bits per channel) and then use the spare alpha channels of OutputB and OutputE to reach the 10 alphas I need in my example. The render pass would be extremely heavy, but I don't see any other way.
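As a rough illustration of this first idea, the output layout could look like the sketch below. The struct name, the `CoverageA`/`CoverageB` targets, the `PackCoverage` helper, and the order of the 10 coverage values are all assumptions made for the example; which attribute each coverage slot belongs to is left open. It uses 7 render targets, which stays under the limit of 8.

```hlsl
// Hypothetical MRT layout: the five existing targets plus two coverage targets.
struct FLayerOutputs
{
    float4 OutputA   : SV_Target0; // BaseColor.rgb, Opacity
    float4 OutputB   : SV_Target1; // Metallic, Roughness, Specular, coverage[0]
    float4 OutputC   : SV_Target2; // Normal.rgb, Height
    float4 OutputD   : SV_Target3; // WorldTangent.rgb, Anisotropy
    float4 OutputE   : SV_Target4; // Emissive.rgb, coverage[1]
    float4 CoverageA : SV_Target5; // coverage[2..5]
    float4 CoverageB : SV_Target6; // coverage[6..9]
};

// Writes 10 per-channel alpha values into the two spare alpha channels
// and the two extra RGBA targets. The slot order is arbitrary.
void PackCoverage(in float Coverage[10], inout FLayerOutputs Out)
{
    Out.OutputB.a = Coverage[0];
    Out.OutputE.a = Coverage[1];
    Out.CoverageA = float4(Coverage[2], Coverage[3], Coverage[4], Coverage[5]);
    Out.CoverageB = float4(Coverage[6], Coverage[7], Coverage[8], Coverage[9]);
}
```

The blend pass would then read each coverage value from the matching slot when interpolating the corresponding attribute.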

My second idea is to run a second render pass just for the alphas. I guess it amounts to much the same thing, since I would just be splitting the workload across 2 passes with fewer render targets each, although I am not an expert, so maybe that has some advantage.

My third option is not to store alphas at all and to assume that a value different from the channel's default is solid, while a value equal to the default is transparent. This might work and would save a ton of disk space, but I don't think I would be able to interpolate when blending different layers.
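A small sketch of what this third option implies, using a hypothetical helper and a hypothetical `Tolerance` parameter: the coverage derived this way is binary, which is where the interpolation concern comes from.

```hlsl
// Hypothetical helper: derives coverage by comparing a stored value
// against the channel's default instead of reading a stored alpha.
float CoverageFromDefault(float3 Value, float3 DefaultValue, float Tolerance)
{
    // 1.0 where any component differs from the default by more than
    // Tolerance, 0.0 otherwise. There is nothing in between.
    return any(abs(Value - DefaultValue) > Tolerance) ? 1.0 : 0.0;
}
```

Under this scheme a texel painted with a soft brush edge would presumably be stored already mixed with the default value and then treated as fully solid, so it would blend against the default rather than against the layers below. Painting the default value itself on purpose could also not be told apart from an unpainted texel.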

So I hope you can give me some insight into how something like this could be implemented. I would love to see how Substance Painter does it, but I'm afraid that is not possible.

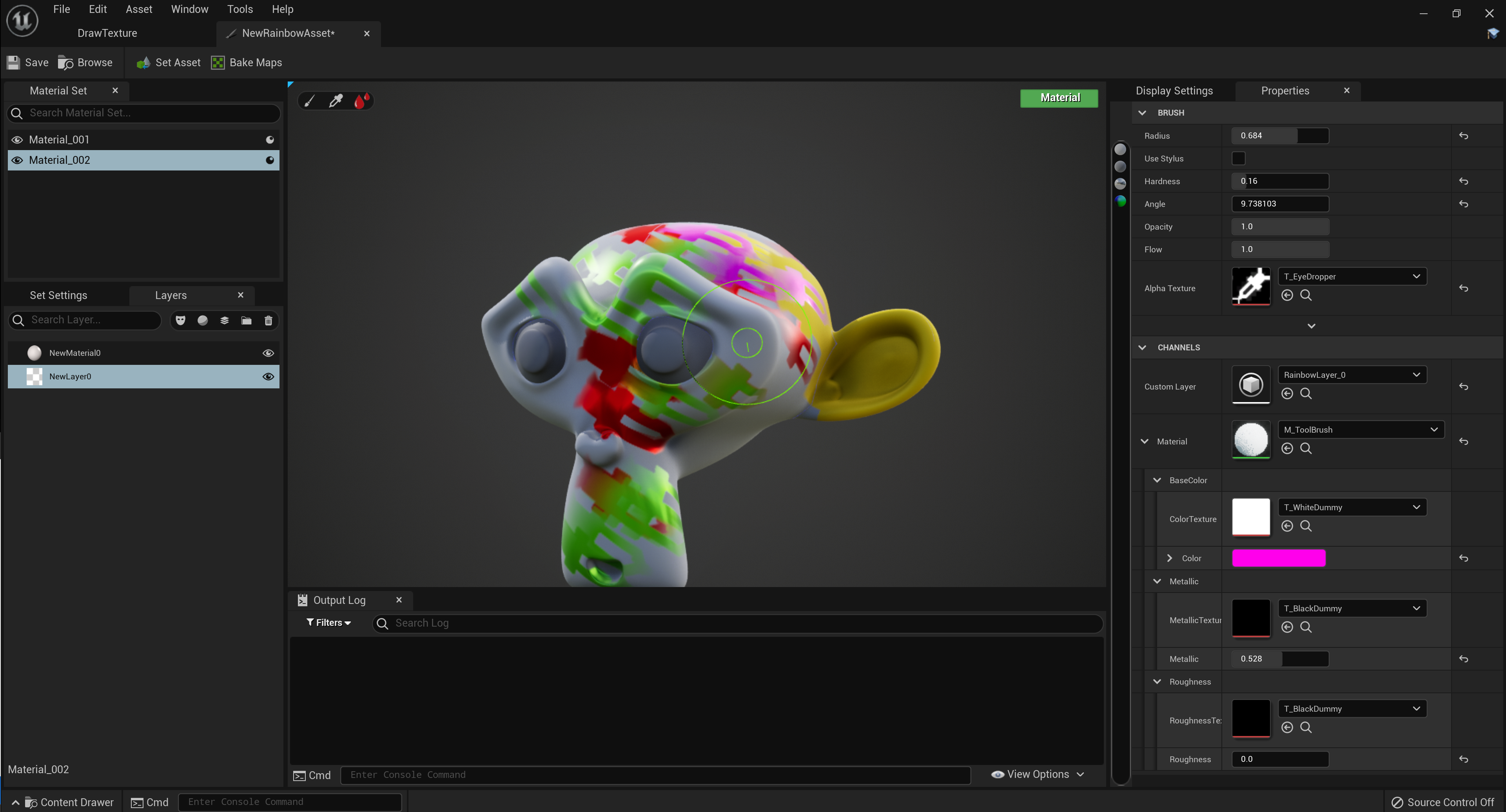

My project is called Rainbow. If you are interested, here is a small image of how it looks inside UE5. It already paints channels and everything, but only on 1 layer for now, hahaha.