I also no longer need to do manual glow mapping, since anything can be a light source.

I dare say that, barring skinned animation, the path tracer is complete. I guess I could also do bokeh. LOL

Can you please elaborate on what you know about PBR materials?

Right now I pass in two textures to the shader – one for colour/opacity, and the other for reflectivity (and up to three more channels, for more effects).

taby said:

Can you please elaborate on what you know about PBR materials?

I can share the experience I've had with this. You saw my results in the projection image, which shows metal vs plastic balls. I used a grid of such balls to show roughness and metalness, like any PBR tutorial does.

I learned the math from learnopengl.com; iirc it was not hard to port to ray tracing.

The hard part was importance sampling.

A PBR material has a complex function to define its reflectance, because it adds the Fresnel term on top of something simple like the Phong lighting model.

So the question ‘which directions should we sample, so the average of their results gives us a correct result in a statistical sense?’ is hard to answer.

Idk which shading models have analytical solutions for this, but it's beyond my math skill to figure this out.

So instead I used a stochastic alternation between a Lambert (cosine) distribution to sample the diffuse term and a cone, with its angle derived from roughness, for the specular term.

Because that's an approximation, I had to weight the results individually as well, so my importance sampling was only half-assed, but much better than nothing.
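A minimal sketch of that kind of alternation, in Python for readability. The names `sample_lobe` and `specular_prob` and the roughness-to-angle mapping are assumptions made for illustration, not the code used above. Directions are returned in a local frame: +Z is the surface normal for the diffuse lobe and the mirror direction for the cone.

```python
import math
import random


def sample_cosine_hemisphere(u1, u2):
    """Cosine-weighted direction in a local frame whose +Z axis is the surface normal."""
    r = math.sqrt(u1)
    phi = 2.0 * math.pi * u2
    return (r * math.cos(phi), r * math.sin(phi), math.sqrt(max(0.0, 1.0 - u1)))


def sample_uniform_cone(u1, u2, cos_max):
    """Uniform direction inside a cone around +Z; here +Z stands for the mirror direction."""
    cos_t = 1.0 - u1 * (1.0 - cos_max)
    sin_t = math.sqrt(max(0.0, 1.0 - cos_t * cos_t))
    phi = 2.0 * math.pi * u2
    return (sin_t * math.cos(phi), sin_t * math.sin(phi), cos_t)


def sample_lobe(roughness, specular_prob, rng):
    """Pick one lobe at random; return (lobe name, local direction, weight = 1 / P(lobe))."""
    if rng.random() < specular_prob:
        # Hypothetical mapping: roughness 0 -> mirror, roughness 1 -> 90 degree half-angle.
        cos_max = math.cos(roughness * 0.5 * math.pi)
        direction = sample_uniform_cone(rng.random(), rng.random(), cos_max)
        return "specular", direction, 1.0 / specular_prob
    direction = sample_cosine_hemisphere(rng.random(), rng.random())
    return "diffuse", direction, 1.0 / (1.0 - specular_prob)


if __name__ == "__main__":
    rng = random.Random(1)
    for _ in range(5):
        print(sample_lobe(roughness=0.3, specular_prob=0.5, rng=rng))
```

The returned weight only compensates for the random choice between the two lobes (`specular_prob` must lie strictly between 0 and 1). Each lobe's own BRDF value and pdf still have to be applied by the caller.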

Aressera has answered related questions recently, mentioning a similar idea, I think. But instead of Lambert and a cone, he proposes using a Phong distribution iirc, which sounds like a good idea to me. He has linked a paper about Phong for RT.

I would ignore importance sampling at first and just get the shading right.

Once it works you can add IS to make it faster.

(I have avoided some related PT terminology, because i just lack the expertise : )

Yeah, but it seems you have diffuse and sharp reflections and just mix them naively. There is no roughness.

The metal should also tint the reflection to its own color, like gold does. So you need to give it a color to check this.

If you pay attention, you see that the lighting is realistic in your render, but the materials are not. They look ‘too perfect’, I would say. Artificial, simplified, computer graphics stuff.

That's what PBR can fix.
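As a rough illustration of the metal tint, here is a sketch of the metalness convention used in tutorials such as learnopengl.com: the reflectance at normal incidence (F0) is blended between about 4% grey for non-metals and the surface colour for metals, then fed into Schlick's Fresnel approximation. The function names and the gold-like colour are assumptions for the example.

```python
def mix(a, b, t):
    """Component-wise linear blend between colours a and b."""
    return tuple(x * (1.0 - t) + y * t for x, y in zip(a, b))


def base_reflectance(albedo, metalness):
    """F0: about 4% grey for non-metals, the albedo colour itself for metals."""
    return mix((0.04, 0.04, 0.04), albedo, metalness)


def fresnel_schlick(cos_theta, f0):
    """Schlick's approximation of the Fresnel reflectance, per colour channel."""
    k = (1.0 - min(max(cos_theta, 0.0), 1.0)) ** 5
    return tuple(f + (1.0 - f) * k for f in f0)


if __name__ == "__main__":
    gold = (1.0, 0.77, 0.34)  # illustrative gold-like colour, not a measured value
    print(fresnel_schlick(1.0, base_reflectance(gold, 1.0)))  # metal: tinted reflection
    print(fresnel_schlick(1.0, base_reflectance(gold, 0.0)))  # plastic: grey reflection
```

Looking straight at the surface, the metal reflects its own colour while the plastic reflects a faint grey. At grazing angles both approach white.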

Using a realistic model with proper textures might help. Some are on github, like here.

But i guess it's some work to import this.

Fortunately, I'm not the model / texture artist in our team. We'll see how things progress, in terms of the assets. We are working on skinning/bones animation next.

PBR in the meantime.

Sadly there is no common reference scene to verify PBR.

And i could not make a standard PBR material as used for games in tools like Blender either. There should be a way to do this, though.

Consequently, i do not know if my implementation is right or wrong. : (

I'll add an implementation note:

Whenever you work with importance sampling and various BRDFs, first go back to the beginning, which is naive path tracing. Let your integrator (which should be correct!) run until you get a smooth result and work from there - this is going to be your ground truth.

Now you can start implementing importance sampling for specific BRDFs. You will be able to see/measure the difference against your ground truth - if there is any significant difference, you've got your importance sampling wrong.

…

Importance sampling is not going to improve your converged result nor change it in any way - it should only offer a faster convergence rate compared to the naive approach.
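A toy version of that check, assuming a Lambert surface under a hypothetical sky whose radiance equals cos(theta). The albedo of 0.75 and all names are made up for the example. Both estimators must agree on the same value (2/3 of the albedo, so 0.5 here); only the noise differs.

```python
import math
import random

ALBEDO = 0.75  # hypothetical diffuse albedo


def radiance_in(cos_theta):
    """Hypothetical sky: brightest at the zenith, black at the horizon."""
    return cos_theta


def estimate_uniform(n, rng):
    """Naive estimator: uniform hemisphere sampling, pdf = 1 / (2 pi)."""
    total = 0.0
    pdf = 1.0 / (2.0 * math.pi)
    for _ in range(n):
        cos_t = rng.random()  # uniform solid angle means uniform cos(theta)
        total += (ALBEDO / math.pi) * radiance_in(cos_t) * cos_t / pdf
    return total / n


def estimate_cosine(n, rng):
    """Importance-sampled estimator: cosine-weighted sampling, pdf = cos(theta) / pi."""
    total = 0.0
    for _ in range(n):
        cos_t = math.sqrt(rng.random())
        pdf = cos_t / math.pi
        if pdf > 0.0:  # a zero-pdf sample also has zero contribution
            total += (ALBEDO / math.pi) * radiance_in(cos_t) * cos_t / pdf
    return total / n


if __name__ == "__main__":
    rng = random.Random(1)
    for n in (100, 10000, 1000000):
        print(n, estimate_uniform(n, rng), estimate_cosine(n, rng))
```

If the two columns settle on different numbers as n grows, the sampling routine or its pdf is wrong.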

What is quite common (and I think Aressera mentioned it) is that you use a different function for importance sampling that is ‘close to’ what you need and still provides good results.

Deriving (or creating) sampling functions for BRDFs is non-trivial. To elaborate further:

In essence any generic BRDF will look like this:

Where:
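In the usual textbook notation (assumed here; the symbols used originally may differ), the general form and its symbols can be written as:

```latex
f_r(\omega_i, \omega_o)
  = \frac{\mathrm{d}L_o(\omega_o)}{\mathrm{d}E_i(\omega_i)}
  = \frac{\mathrm{d}L_o(\omega_o)}{L_i(\omega_i)\,\cos\theta_i\,\mathrm{d}\omega_i}

% \omega_i  : incoming (light) direction
% \omega_o  : outgoing (view) direction
% L_i, L_o  : incoming and outgoing radiance
% E_i       : irradiance arriving from \omega_i
% \theta_i  : angle between \omega_i and the surface normal
```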

Or to be precise - it is a function with 2 variable parameters and a material definition. Note that physically based BRDFs will also be positive functions, obey Helmholtz reciprocity and conserve energy, i.e.:
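Written out in the same assumed notation, these three properties are commonly stated as:

```latex
\begin{align}
  f_r(\omega_i, \omega_o) &\ge 0
    && \text{(positivity)} \\
  f_r(\omega_i, \omega_o) &= f_r(\omega_o, \omega_i)
    && \text{(Helmholtz reciprocity)} \\
  \int_{\Omega} f_r(\omega_i, \omega_o)\,\cos\theta_i\,\mathrm{d}\omega_i &\le 1
    \quad \forall\,\omega_o
    && \text{(energy conservation)}
\end{align}
```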

So - when we're talking about importance sampling a BRDF, we start with the famous rendering equation (I intentionally chose this form - to simplify by removing time and wavelength):

Where:
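A common way to write this simplified form, without time and wavelength (the symbol names are an assumption), together with its symbols:

```latex
L_o(x, \omega_o) = L_e(x, \omega_o)
  + \int_{\Omega} f_r(x, \omega_i, \omega_o)\, L_i(x, \omega_i)\,
    (\omega_i \cdot n)\,\mathrm{d}\omega_i

% L_o(x, \omega_o) : radiance leaving point x in direction \omega_o
% L_e(x, \omega_o) : radiance emitted at x in direction \omega_o
% L_i(x, \omega_i) : radiance arriving at x from direction \omega_i
% f_r              : the BRDF at x
% n                : surface normal at x
% \Omega           : hemisphere of directions above x
```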

What matters is how we actually solve this equation. It is a Fredholm integral equation of the second kind, which in generic form looks like:

Where:
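In a standard textbook form (the symbol names are assumed), with the correspondence to the rendering equation noted in the comments:

```latex
\varphi(t) = f(t) + \lambda \int_a^b K(t, s)\,\varphi(s)\,\mathrm{d}s

% \varphi : the unknown function   (radiance L in the rendering equation)
% f       : a known function       (emitted radiance L_e)
% K       : the kernel             (BRDF times the cosine term)
% \lambda : a constant parameter
```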

The resemblance to the rendering equation should be visible. So how do we solve it? By a Liouville-Neumann series expansion (we can clearly see that the integration has to be performed recursively), and then by performing a Monte Carlo random walk on that, terminating at some point because the series is infinite.

The important part that comes out of L-N is that each subsequent step has a smaller impact on the final result, and how big that impact is, is defined by K (the BRDF, together with the cosine term, in the rendering equation). Also, once a given step is taken, it affects all subsequent steps in that path.
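In operator form the expansion can be sketched as follows, where the transport operator T is notation assumed for this example:

```latex
(T L)(x, \omega_o) = \int_{\Omega} f_r(x, \omega_i, \omega_o)\, L(x, \omega_i)\,
                     (\omega_i \cdot n)\,\mathrm{d}\omega_i

L = L_e + T L
  = L_e + T L_e + T^2 L_e + T^3 L_e + \dots
  = \sum_{k=0}^{\infty} T^k L_e

% T^k L_e is the light that reaches the eye after exactly k bounces.
% A path of length k carries the product of k kernel values, which is why
% every step scales the contribution of all later steps on that path.
```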

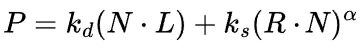

Now, let's get back to BRDF - it is a function that in 2D space (using w_i and w_o as axes x and y), looks like the following:

The second and third representations show two different samplings of the BRDF. In the first of them, most samples yield a very low value, so each sample contributes too little. In the second, samples land where the BRDF is large and contribute far more, so your convergence to the solution will be significantly faster.

Now, your question is: how do we generate the sample distribution on the right, knowing the BRDF (and, subsequently, its probability density function)?

This BRDF is defined as:
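One common energy-normalized form of the Phong BRDF is sketched below. Which variant was meant here is an assumption, and alpha is used for the shininess exponent to match the text that follows:

```latex
f_r(\omega_i, \omega_o) = \frac{k_d}{\pi}
  + k_s\,\frac{\alpha + 2}{2\pi}\,\max(0,\; r \cdot \omega_o)^{\alpha}

% k_d    : diffuse reflectivity
% k_s    : specular reflectivity, with k_d + k_s \le 1
% \alpha : shininess exponent
% r      : \omega_i mirrored about the surface normal
```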

This shows that it is composed of a diffuse term and a specular term. Phong sums two events, but since we are doing a random walk we can choose just one of them for the next step. So the first step is deciding whether a diffuse or a specular event happens.
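A minimal sketch of that decision, assuming the reflectivities `k_d` and `k_s` (hypothetical names) sum to at most 1 and are used directly as probabilities. The remainder is treated as absorption, which ends the path.

```python
import random


def choose_event(k_d, k_s, u):
    """Map a uniform random number u in [0, 1) to the next event of the random walk."""
    if u < k_d:
        return "diffuse"
    if u < k_d + k_s:
        return "specular"
    return "absorb"


if __name__ == "__main__":
    rng = random.Random(1)
    print([choose_event(0.5, 0.3, rng.random()) for _ in range(10)])
```

Because an event is picked with probability `k_d` or `k_s`, the chosen term's contribution has to be divided by that same probability.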

For diffuse one, performing cosine-distribution is ideal (Why? It can be proved analytically based on how N.L behaves, as it literally is cosine of the angle between N and L; we want to select higher values with sampling - therefore cosine-weighted sampling).

The probability density function of such distribution is cos(x)/pi - these functions can be derived using formal definitions, i.e.:

Where:
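A sketch of those definitions and of how the cosine-weighted case follows from them (standard results; the notation is assumed):

```latex
% Formal definitions for a random variable X with density p and CDF P
P(x) = \Pr\{X \le x\} = \int_{-\infty}^{x} p(t)\,\mathrm{d}t, \qquad
p(x) = \frac{\mathrm{d}P(x)}{\mathrm{d}x}, \qquad
\int_{-\infty}^{\infty} p(x)\,\mathrm{d}x = 1

% Cosine-weighted hemisphere: density over solid angle
p(\omega) = \frac{\cos\theta}{\pi}, \qquad
\int_{0}^{2\pi}\!\!\int_{0}^{\pi/2} \frac{\cos\theta}{\pi}\,
  \sin\theta\,\mathrm{d}\theta\,\mathrm{d}\phi = 1

% Marginal in theta, its CDF, and the inversion with uniform u_1, u_2 in [0, 1)
p(\theta) = 2\cos\theta\,\sin\theta, \qquad
P(\theta) = \sin^2\theta, \qquad
\theta = \arcsin\sqrt{u_1}, \quad \phi = 2\pi u_2
```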

Note - a pdf is a continuous function that defines the likelihood of getting a certain sample! It has to integrate to 1. It is also related to the cumulative distribution function (the pdf is its derivative).
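Both properties are easy to check numerically. Below is a sketch for the cosine-weighted case, with function names chosen for this example. It samples by inverting the CDF and integrates the pdf over the hemisphere with a midpoint rule.

```python
import math
import random


def cosine_pdf(cos_theta):
    """Density over solid angle of the cosine-weighted distribution."""
    return max(0.0, cos_theta) / math.pi


def sample_cosine_hemisphere(u1, u2):
    """Inverting the CDF sin^2(theta) gives sin(theta) = sqrt(u1); +Z is the normal."""
    sin_t = math.sqrt(u1)
    cos_t = math.sqrt(max(0.0, 1.0 - u1))
    phi = 2.0 * math.pi * u2
    direction = (sin_t * math.cos(phi), sin_t * math.sin(phi), cos_t)
    return direction, cosine_pdf(cos_t)


def integrate_pdf_over_hemisphere(steps=2000):
    """Midpoint rule in theta; the pdf does not depend on phi, which contributes 2 pi."""
    d_theta = 0.5 * math.pi / steps
    total = 0.0
    for i in range(steps):
        theta = (i + 0.5) * d_theta
        total += cosine_pdf(math.cos(theta)) * math.sin(theta) * d_theta
    return 2.0 * math.pi * total


if __name__ == "__main__":
    print(integrate_pdf_over_hemisphere())
    rng = random.Random(1)
    print(sample_cosine_hemisphere(rng.random(), rng.random()))
```

The printed integral should come out very close to 1. A sampler whose pdf integrates to anything else will bias the render.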

For the specular one, it is also obvious that we're sampling in a cosine-weighted way (the dot product being a cosine), but the distribution has to factor in the reflected direction and also the alpha parameter (shininess).
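A sketch of one widely used way to do this: sample a power-cosine lobe around the mirror direction `r`, with pdf (s + 1) / (2 pi) * cos^s, where s is the shininess. The helper names are assumptions made for the example.

```python
import math
import random


def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])


def normalize(v):
    length = math.sqrt(dot(v, v))
    return (v[0] / length, v[1] / length, v[2] / length)


def reflect(w, n):
    """Mirror w about the unit normal n; w points away from the surface."""
    d = 2.0 * dot(w, n)
    return (d * n[0] - w[0], d * n[1] - w[1], d * n[2] - w[2])


def sample_phong_lobe(r, shininess, u1, u2):
    """Sample a direction around the unit vector r with pdf (s + 1) / (2 pi) * cos^s."""
    cos_a = u1 ** (1.0 / (shininess + 1.0))
    sin_a = math.sqrt(max(0.0, 1.0 - cos_a * cos_a))
    phi = 2.0 * math.pi * u2
    # Build an orthonormal frame (t, b, r) around the reflected direction.
    helper = (1.0, 0.0, 0.0) if abs(r[0]) < 0.9 else (0.0, 1.0, 0.0)
    t = normalize(cross(helper, r))
    b = cross(r, t)
    x, y = sin_a * math.cos(phi), sin_a * math.sin(phi)
    direction = tuple(x * t[i] + y * b[i] + cos_a * r[i] for i in range(3))
    pdf = (shininess + 1.0) / (2.0 * math.pi) * cos_a ** shininess
    return direction, pdf


if __name__ == "__main__":
    rng = random.Random(1)
    normal = (0.0, 0.0, 1.0)
    mirror = reflect(normalize((1.0, 0.0, 1.0)), normal)
    print(sample_phong_lobe(mirror, 20.0, rng.random(), rng.random()))
```

Samples can end up below the surface when `r` is close to the horizon. The caller should treat those as contributing zero instead of resampling, otherwise the pdf no longer matches.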

How do we know that?

Simply put, we're trying to sample where the function is maximal. To derive this, it helps to write a small utility that shows what N random samples look like on top of the BRDF plotted in 2D space with incoming/outgoing directions (similar to the image above).
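A very reduced sketch of such a utility, printing text bars instead of plotting. It collapses the 2D picture to a single axis, the angle from the mirror direction, and compares uniform hemisphere samples with samples drawn from a power-cosine lobe. All names and defaults are assumptions made for the example.

```python
import math
import random


def phong_lobe(angle, shininess):
    """Relative height of the specular lobe at a given angle from the mirror direction."""
    return max(0.0, math.cos(angle)) ** shininess


def uniform_angles(n, rng):
    """Angles to the axis for directions chosen uniformly over a hemisphere."""
    return [math.acos(rng.random()) for _ in range(n)]


def lobe_angles(n, shininess, rng):
    """Angles to the axis for directions drawn proportionally to cos^shininess."""
    return [math.acos(rng.random() ** (1.0 / (shininess + 1.0))) for _ in range(n)]


def histogram(angles, bins):
    """Count angles in equal-width bins covering [0, pi/2]."""
    counts = [0] * bins
    for a in angles:
        counts[min(bins - 1, int(a / (0.5 * math.pi) * bins))] += 1
    return counts


def print_comparison(shininess=50.0, n=2000, bins=9, seed=1):
    """Print the lobe height per bin next to bars for both sample sets."""
    rng = random.Random(seed)
    uni = histogram(uniform_angles(n, rng), bins)
    lobe = histogram(lobe_angles(n, shininess, rng), bins)
    for i in range(bins):
        centre = (i + 0.5) * 0.5 * math.pi / bins
        print(f"{math.degrees(centre):5.1f} deg  brdf={phong_lobe(centre, shininess):.3f}"
              f"  uniform={'#' * (uni[i] * 60 // n)}  lobe={'#' * (lobe[i] * 60 // n)}")


if __name__ == "__main__":
    print_comparison()
```

With a high shininess, the uniform bars pile up at angles where the lobe value is practically zero, while the lobe-driven bars sit where the BRDF is large.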

Note: You have to analyze the BRDF and find out where it reaches its maximum values. Then find a distribution whose probability density function matches those maxima.

Note: It is also possible to use approximation functions - which is what was suggested in a different thread. I think I've also seen some thesis attempting to use some generic distribution functions with factors being tweaked for highly complex BRDFs (for which deriving distribution functions and their probability density functions would be very hard).

…

From the above you can see that while it seems straightforward, it's non-trivial (I tried to cut some corners and make it short enough for a forum post).