Sounds great. I shall consider working on such a thing, once I've got the basics down.

Thanks for clearing that up!

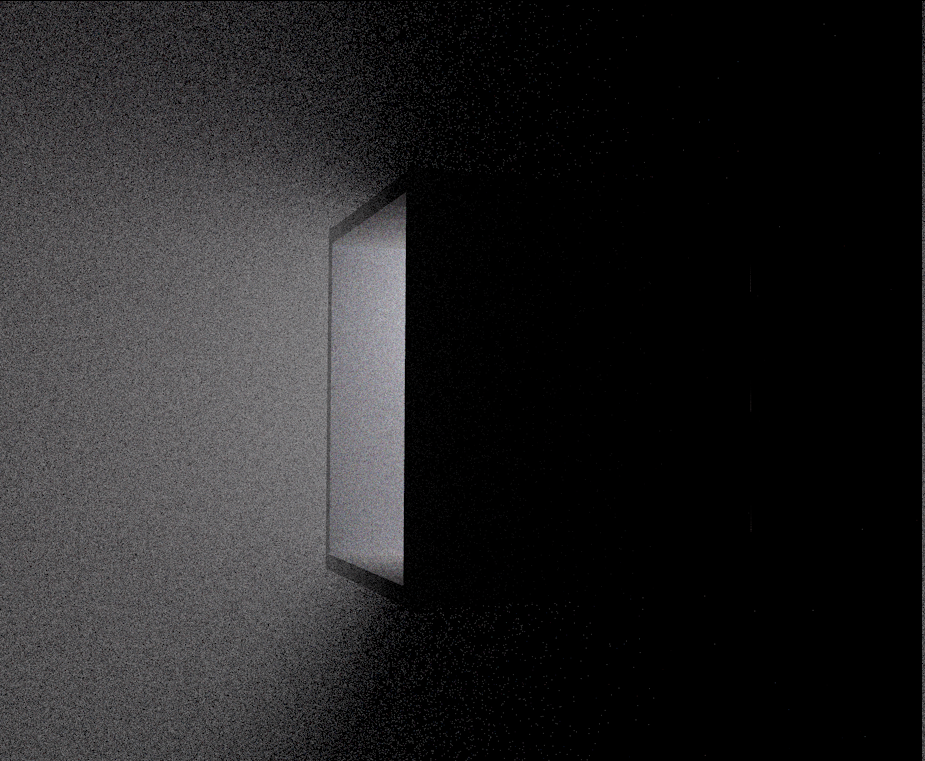

I've got fog working, sort of…

Here is a shot of the box glowing like a television in the fog:

Umm… having some experienced people here, i'd like to ask how to do progressive RT. I mean the process of averaging samples for a dynamic camera from previous frames.

Usually we render just a still image and everything is static. To have 30 spp, we just calculate 30 samples per pixel and average all 30. It's the same as rendering 30 images and blending them uniformly.

If we extend this to animation, we could do the same but do only 1 sample per pixel per frame. And we might try to get the other 29 from previous frames (eventually reprojected).

Now my question: We can not store 29 previous frames. So what do we do? Using an exponential moving average i guess? Or is there better math specific to the problem?

Never did any realtime RT, and i've been curious about this for a long time. : )
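To make the two options in the question concrete, here is a minimal sketch (not anyone's production code) comparing a uniform average computed incrementally with an exponential moving average. Neither needs the 29 previous frames, only the running value. The function names and the sample values are made up for illustration.

```python
def running_mean(samples):
    """Uniform average computed incrementally: only the mean and a count are stored."""
    mean, count = 0.0, 0
    for x in samples:
        count += 1
        mean += (x - mean) / count  # same as blending the new sample with weight 1/count
    return mean


def exponential_moving_average(samples, alpha):
    """EMA: every new sample gets the fixed weight alpha, older samples decay."""
    it = iter(samples)
    ema = next(it)  # initialise the history with the first sample
    for x in it:
        ema += alpha * (x - ema)
    return ema


# Hypothetical per-frame radiance values for one pixel.
samples = [0.2, 0.9, 0.4, 0.7, 0.1, 0.6]
uniform = running_mean(samples)
smoothed = exponential_moving_average(samples, alpha=0.2)
```

The running mean matches the offline "average of N samples" exactly but never forgets stale history. The EMA forgets old frames at a rate set by `alpha`, which is what makes it tolerable when things move, at the cost of a noise floor that never goes away.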

JoeJ said:

Umm… having some experienced people here, i'd like to ask how to do progressive RT. I mean the process of averaging samples for a dynamic camera from previous frames.

This might be a bit more complicated depending on how you define progressive RT - do you mean progressive unbiased rendering? That means that by increasing the number of samples you get closer to the solution of the rendering equation - and you can progressively add more and more samples into it?

JoeJ said:

Usually we render just a still image and everything is static. To have 30 spp, we just calculate 30 samples per pixel and average all 30. It's the same as rendering 30 images and blending them uniformly.

The blending should work in theory. In practice it boils down to how your blending is implemented and whether you're hitting numeric precision problems (which I assume you handle correctly, otherwise the image degradation would be visible at 30 spp).

JoeJ said:

If we extend this to animation, we could do the same but do only 1 sample per pixel per frame. And we might try to get the other 29 from previous frames (eventually reprojected). Now my question: We can not store 29 previous frames. So what do we do? Using an exponential moving average i guess? Or is there better math specific to the problem?

Well… it depends. The first case is progressively rendering a scene with a static camera, lights and objects. That is possible and not that complex. Once you start moving anything, it gets complicated (all this time I'm assuming you want an unbiased solution).

Moving camera, static lights and objects - at this point you could use reprojection on the data stored in the resulting pixels, and compute the pixels that have no reprojected history with higher priority (those have to be cleared and start from scratch).

Moving camera and moving objects or lights (or both) - this is a big problem. You can't just re-project the resulting pixels, because they hold data from light paths that may not be valid anymore (a path might have become occluded by an object, the object the light path went through might have moved, etc.). It doesn't matter whether you use path tracing (unbiased) or progressive photon mapping (strictly speaking biased but consistent) - the accumulated result of either becomes invalid once anything moves in the scene.
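For the moving-camera, static-scene case, the reprojection step can be sketched roughly as below. This is a toy under loud assumptions: `motion` is a hypothetical per-pixel integer offset to the pixel's position in the previous frame, images are plain nested lists, and the only validity test is "did the lookup land on screen". A real renderer would also compare depth or normals to catch disocclusions.

```python
def reproject(prev_color, prev_count, motion, width, height):
    """Fetch history for every pixel of the new frame.

    motion[y][x] is an assumed integer offset (dx, dy) from the current pixel
    to where the same surface point was in the previous frame.
    """
    color = [[0.0] * width for _ in range(height)]
    count = [[0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            dx, dy = motion[y][x]
            px, py = x + dx, y + dy
            if 0 <= px < width and 0 <= py < height:
                color[y][x] = prev_color[py][px]
                count[y][x] = prev_count[py][px]
            # otherwise: no valid history, the pixel restarts with zero samples
    return color, count


def accumulate(color, count, new_sample):
    """Blend one new sample per pixel into the reprojected running mean."""
    for y, row in enumerate(new_sample):
        for x, s in enumerate(row):
            count[y][x] += 1
            color[y][x] += (s - color[y][x]) / count[y][x]
    return color, count
```

Pixels whose count comes back as 0 are the ones that "start from scratch"; they are also the natural candidates for extra samples in the current frame.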

There are approaches in path tracing that store data and reuse it spatially and temporally, not at the level of final pixels but at the level of samples (used for multiple importance sampling). That is well beyond the scope of a single post here (or maybe even multiple). In short though - one of the main ways to improve the convergence of the image (apart from explicit sampling) is importance sampling (or multiple importance sampling, to be precise). There are ways to create and “cache” reservoirs of samples and use them in an unbiased manner, either spatially or temporally (or both - which is what ReSTIR does). To ensure that this stays unbiased you also need to discard sample reservoirs that are invalid (which could also be due to animation), and generate new ones. This means that you're not storing images, but rather sample reservoirs from which you draw.
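The basic building block behind such reservoirs is streaming weighted reservoir sampling. The sketch below shows only that building block; it is not ReSTIR itself. In particular it leaves out the target function, the unbiased contribution weight and the validity checks mentioned above, and the class and method names are made up for illustration.

```python
import random


class Reservoir:
    """Single-sample weighted reservoir (streaming weighted reservoir sampling)."""

    def __init__(self):
        self.sample = None  # currently selected candidate
        self.w_sum = 0.0    # sum of the weights of all candidates seen so far
        self.count = 0      # number of candidates seen so far

    def update(self, candidate, weight, rng=random):
        """Offer one candidate; it replaces the kept one with probability weight / w_sum."""
        self.w_sum += weight
        self.count += 1
        if self.w_sum > 0.0 and rng.random() < weight / self.w_sum:
            self.sample = candidate

    def merge(self, other, rng=random):
        """Fold in another reservoir, e.g. a neighbour's or last frame's."""
        seen = self.count + other.count
        if other.sample is not None:
            # The other reservoir's sample stands in for everything it has seen.
            self.update(other.sample, other.w_sum, rng)
        self.count = seen
```

After any number of `update` calls, each candidate is held with probability proportional to its weight, while only one sample and two numbers are stored. That constant-size state is what makes it practical to keep one reservoir per pixel and reuse it across neighbours and frames.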

Iq did a loose implementation of the paper in an interactive manner on ShaderToy (although it is biased):

He had a moving camera (but no moving lights or objects). The results can also be seen live here - https://www.shadertoy.com/view/stVfWc - and if I'm not mistaken it uses 2 samples for the AO computation.

Vilem Otte said:

This might be a bit more complicated depending on how you define progressive RT - do you mean progressive unbiased rendering? That means that by increasing the number of samples you get closer to the solution of the rendering equation - and you can progressively add more and more samples into it?

No, i would call what you describe as ‘accumulating random samples is just how Monte Carlo Integration works’, which applies to many things like choosing a ray to a random sub pixel to get AA, or choosing random secondary rays directions. Summing those samples up and averaging them is an iterative integration process, but not ‘progressive’.

IIRC 'progressive RT' means interactive / realtime rendering, which differs from the above still image method because we can't easily tell how many valid samples we have, so we can no longer do a simple average.

But i'm not really sure about the term. Likely i have picked it up at the times when Brigade showed first realtime PT.

AFAICT Brigade did not yet use anything like modern denoisers. There were no spatio-temporal filters attempting to use samples from neighbouring pixels.

Likely they used a very simple method, e.g. an exponential moving average, controlled by some alpha parameter depending on variance. So a new sample of a formerly occluded pixel overrides invalid history with a big weight, while a new sample for a temporally stable pixel gets a low weight to accumulate more samples and increase precision.
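A per-pixel blend along those lines could look like the sketch below. To be clear, this is a guess at the general idea and not Brigade's method. It also swaps the variance-driven alpha for a simpler heuristic: alpha is derived from how many samples of valid history the pixel has. `history_valid` and `max_len` are made-up names.

```python
def temporal_blend(history, history_len, sample, history_valid, max_len=32):
    """Blend one new sample into a pixel's history.

    Returns (new_value, new_history_len).
    """
    if not history_valid:
        # Disocclusion or invalid history: the new sample overrides everything.
        return sample, 1
    # Behaves like an exact running mean until max_len samples are reached,
    # then like an exponential moving average with alpha = 1 / max_len.
    n = min(history_len + 1, max_len)
    alpha = 1.0 / n
    return history + alpha * (sample - history), n
```

A freshly disoccluded pixel gets weight 1 for its first sample, 1/2 for the second and so on, so it converges quickly. A stable pixel settles at the small weight `1 / max_len`, which keeps accumulating precision while still letting slow lighting changes through.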

Vilem Otte said:

at this point you could use reprojection on data stored in resulting pixels, and calculate pixels that are not in reprojection with higher priority (which have to be cleared and start from scratch).

Yeah, something like that. Something a RT newbie can implement to get started with realtime RT, postponing the need to dig into denoising.

But i never came across any tutorials or introductions discussing this topic. So i wonder if i have just missed it, or maybe there is no such simple, well known and widely used method.

Vilem Otte said:

Moving camera and moving either objects or lights (or both) - this is a big problem, you can't just re-project resulting pixels, because they are holding data from light paths that may not be valid anymore (it might have gotten occluded by an object, the object through which light path went moved, etc. etc.).

OT, but this makes me wonder: Some people already have problems accepting the temporal smear that sometimes happens with TAA. Others have problems with disocclusion artifacts of SSAO or SSR.

What do those people think about RT? There seem to be almost no complaints regarding temporal stability, but maybe they just gave up complaining.

Vilem Otte said:

There are ways to create and “cache” reservoirs of samples and use them in an unbiased manner in either spatial manner or temporal manner (or both - which is what ReSTIR does).

Now that you say this, i wonder if the claim of being unbiased then also depends on accepting a temporal range of error. Basically like saying ‘We have bias, but only temporally. We still converge towards the correct solution, so let's call it unbiased.’ : )

(But no rant intended. I don't think unbiased results are a priority for realtime, as long as the error is reasonably bounded.)

I started writing a paper:

https://github.com/sjhalayka/cornell_box_textured/blob/main/caustics/caustics.pdf

JoeJ said:

Now that you say this, i wonder if the claim of being unbiased then also depends on accepting a temporal range of error. Basically like saying ‘We have bias, but only temporally. We still converge towards the correct solution, so let's call it unbiased.’ : ) (But no rant intended. I don't think unbiased results are a priority for realtime, as long as the error is reasonably bounded.)

I do remember seeing actual mathematical proof of ReSTIR being unbiased with both spatial and temporal filtering (keep in mind, you're storing and using reservoirs of sample points - discarding those that won't be temporally valid anymore … so technically it is possible that you may have to occasionally discard ALL of them).

JoeJ said:

OT, but this makes me wonder: Some people already have problems accepting the temporal smear that sometimes happens with TAA. Others have problems with disocclusion artifacts of SSAO or SSR. What do those people think about RT? There seem to be almost no complaints regarding temporal stability, but maybe they just gave up complaining.

If I remember correctly I literally did full MSAA and it was fast enough some time ago (I dragged myself to finally do it after one thread here - I think you participated). I'm still using that pipeline even now (yeah, everything is done in compute shaders). I do heavily dislike smear artifacts, disocclusion artifacts, etc.

JoeJ said:

Yeah, something like that. Something a RT newbie can implement to get started with realtime RT, postponing the need to dig into denoising. But i never came across any tutorials or introductions discussing this topic. So i wonder if i have just missed it, or maybe there is no such simple, well known and widely used method.

Doing reprojection isn't hard - Temporal reprojection (shadertoy.com) - this is just one of many examples there. It literally takes the accumulated value from the previous frame and adds a new one to it - you do need additional information for the reprojection though! It is one of the simple ways to make the image smoother. It does introduce its own set of problems.

I considered and tried doing something similar for the DOOM entry in the compo here back then, but it ended up slower than increasing the number of samples (plus there was a rather huge skylight (sky dome) and direct light - those could be explicitly sampled). The noise was present only in shadows and wasn't that terrible (on a sufficiently high-end card though, which is quite an important detail). Truth be told, the supporting code for the whole project is anything but small and simple.

JoeJ said:

No, i would call what you describe as ‘accumulating random samples is just how Monte Carlo Integration works’, which applies to many things like choosing a ray to a random sub pixel to get AA, or choosing random secondary rays directions. Summing those samples up and averaging them is an iterative integration process, but not ‘progressive’. IIRC 'progressive RT' means interactive / realtime rendering, which differs from the above still image method because we can't easily tell how many valid samples we have, so we can no longer do a simple average. But i'm not really sure about the term. Likely i have picked it up at the times when Brigade showed first realtime PT. AFAICT Brigade did not yet use anything like modern denoisers. There were no spatio-temporal filters attempting to use samples from neighbouring pixels. Likely they used a very simple method, e.g. an exponential moving average, controlled by some alpha parameter depending on variance. So a new sample of a formerly occluded pixel overrides invalid history with a big weight, while a new sample for a temporally stable pixel gets a low weight to accumulate more samples and increase precision.

Unbiased (or at least consistent) progressive rendering algorithms have one property - at any point you can present the current result to the user and then continue the computation, which will keep converging towards the actual solution. Path tracing and progressive photon mapping can be used in this way (for a very simple SPPM implementation you can look at - https://www.shadertoy.com/view/WtS3DG - beware though, photon mapping without an acceleration structure will converge at a VERY slow rate, although you can clearly see its massive advantage for caustics).

If I recall correctly Brigade did do a proper sample average (but I might be wrong here). Just explicit sampling of lights together with cosine-weighted importance sampling of diffuse surfaces can produce a useful image with few spp (given “enough light” in the scene).

At that time I worked on my thesis and faced a similar problem: how to progressively accumulate samples. I ended up using a 32-bit floating point format where each channel held the sum of all previous samples, and each frame added new samples into it. The number of current samples was held by the application on the CPU side and incremented by N each frame. Each time the camera, an object or a light moved, the N was reset and the surface holding the sums was cleared to 0. The user could specify the maximum N that was used (otherwise it went into “infinity” - I never saw numerical precision issues, but they would happen eventually). The N could be either static (set by the user) or variable (based on the previous frame time, to keep rendering ‘interactive’ … yet the minimum was always 1).
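The scheme described above can be sketched roughly as follows. This is only one reading of the description, not the thesis code: the buffer stores per-pixel sums, the sample count lives outside it, any scene change clears both, and the "maximum N" is read here as an optional cap on the total count. The class and its names are hypothetical, and pixels are single floats rather than RGB for brevity.

```python
class SumAccumulator:
    """Sum buffer plus a CPU-side sample count, resolved by division on display."""

    def __init__(self, pixel_count, max_samples=None):
        self.sums = [0.0] * pixel_count
        self.samples = 0                # total samples accumulated per pixel
        self.max_samples = max_samples  # None means "accumulate forever"

    def reset(self):
        """Call whenever the camera, an object or a light moves."""
        self.sums = [0.0] * len(self.sums)
        self.samples = 0

    def add_frame(self, frame_sums, n):
        """frame_sums[i] is the sum of the n new samples traced for pixel i."""
        if self.max_samples is not None and self.samples >= self.max_samples:
            return  # cap reached, keep the converged image as it is
        for i, s in enumerate(frame_sums):
            self.sums[i] += s
        self.samples += n

    def resolve(self):
        """Average for display; black if nothing has been accumulated yet."""
        if self.samples == 0:
            return [0.0] * len(self.sums)
        return [s / self.samples for s in self.sums]
```

Storing sums rather than a blended average keeps the result an exact uniform mean. The price is that the sums grow without bound, which is where the eventual precision issues mentioned above come from.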

One of the other approaches I tried at some point was holding the number of samples per pixel in the A channel of the final result texture. The idea was simple, yet stupid - throw more samples at pixels where explicit sampling of the light(s) failed, thus reducing noise in shadowed areas faster than elsewhere. It introduces a whole lot of problems though (directly lit areas that are also lit by indirect light tend to still be noisy, caustics introduce a whole set of issues, etc.). There was no need to be unbiased in this case and speed was an important factor.
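A minimal sketch of that per-pixel bookkeeping, reading "samples in the A channel" as a per-pixel sample count stored next to the RGB sums. The function names and the `base` / `extra` sample budgets are invented for illustration.

```python
def samples_this_frame(direct_light_hit, base=1, extra=3):
    """Spend more samples where explicit light sampling failed (shadowed pixels)."""
    return base if direct_light_hit else base + extra


def accumulate_pixel(rgba, new_samples):
    """rgba = (r_sum, g_sum, b_sum, count); new_samples is a list of (r, g, b)."""
    r, g, b, count = rgba
    for sr, sg, sb in new_samples:
        r += sr
        g += sg
        b += sb
        count += 1
    return (r, g, b, count)


def resolve_pixel(rgba):
    """Divide the sums by this pixel's own sample count."""
    r, g, b, count = rgba
    if count == 0:
        return (0.0, 0.0, 0.0)
    return (r / count, g / count, b / count)
```

Because every pixel divides by its own count, neighbouring pixels can sit at very different sample counts without any global bookkeeping.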

It is hard to tell what will work better or worse in your case (it may also depend heavily on what your scenes look like, and on your requirements).

taby said:

I started writing a paper:

As documentation it might be fine. If you're going to submit it to reviewers, make absolutely sure that your theory/math is fully correct.

I took a quick look at it and here are a few notes (don't take them as an attempt to stop you from writing it, but rather as the point of view of someone who has published and reviewed some):

This is what currently bugs me a bit in there - and you would get something like this from reviewers at some point (if submitted).

The caustics bug me though - they do seem to converge quite fast, but they just don't seem correct to me.

I'm such a moron. I meant to say backward instead of forward.

I will definitely take what you say to heart. Thank you for the sincere review.

I just looked at it out of curiosity, and these were a few things that bugged me in there (I'm probably nitpicking too much and too early - it's still missing a lot of text).