I know why it’s too bright. Let me experiment with the code to correct the problem.

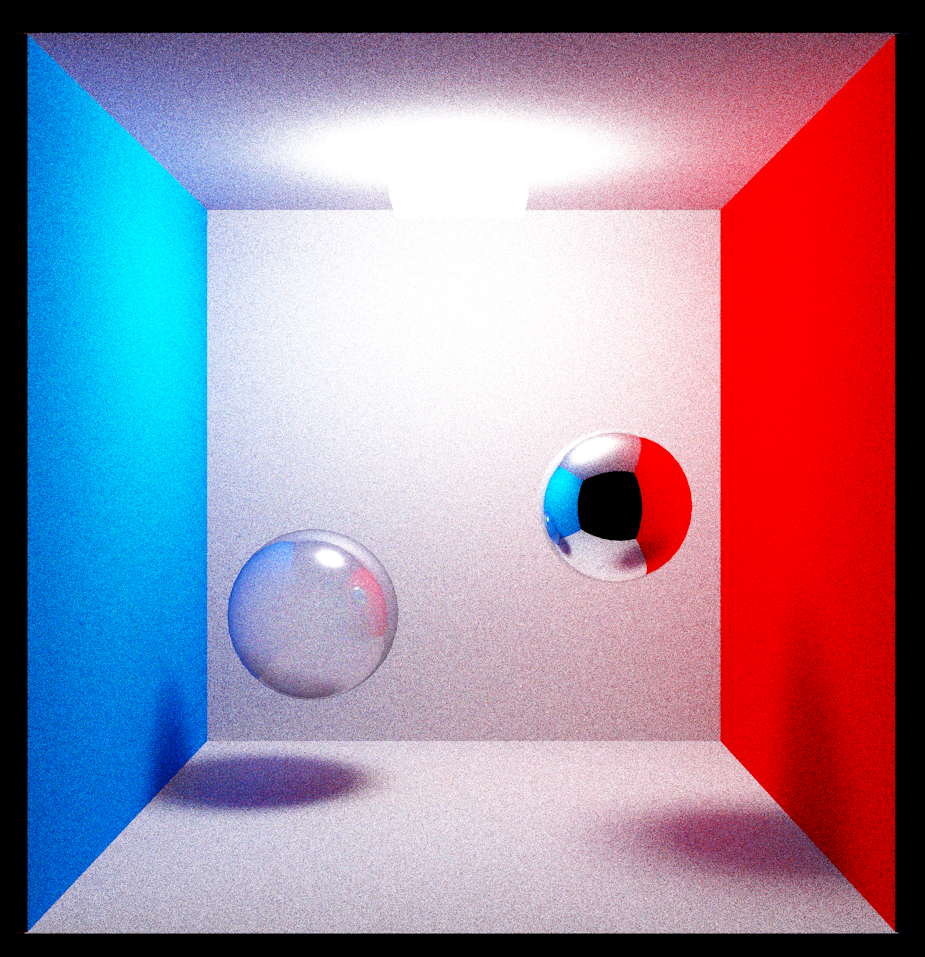

Path tracing in Vulkan

You should be able to reduce brightness by just changing the starting energy of the ray to something other than 1.

Please explain this to me in terms that a 5-year-old would understand. LOL

I am not starting the ray with energy. I understand what you mean physically, but I don't know how to quite implement that in code yet.

Thanks for your comments.

You had a line of code in your shader like local_color = 1; just change the value you set the starting color to.

Right! Sorry about that. The code is now:

```glsl
float local_colour = 0;

for(int i = 0; i < steps; i++)
{
    float tmin = 0.001;
    float tmax = 1000.0;

    traceRayEXT(topLevelAS, gl_RayFlagsOpaqueEXT, 0xff, 0, 0, 0, o.xyz, tmin, d.xyz, tmax, 0);

    if(channel == red_channel)
        local_colour += rayPayload.color.r;
    else if(channel == green_channel)
        local_colour += rayPayload.color.g;
    else
        local_colour += rayPayload.color.b;
```

...when I change it to use multiplication, I get unusable results.

Think about it conceptually. What you are doing by tracing a path from the camera into the scene is gathering how much light is transmitted along the path. Due to the principle of reciprocity, it doesn't matter which way the path is traced: the energy transmitted along it is the same. This is why you can trace rays from either the camera or the lights and get the same result after convergence (i.e. with a very large number of samples per pixel).

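At each step along the path, you gather all light arriving at that surface from all directions. The light intensity received by the surface includes both direct light and indirect light (i.e. further bounces). This is described by the Rendering Equation, which says that the outgoing intensity L_r (i.e. in the camera direction) is equal to the emitted intensity L_e plus the integral, over all incoming directions, of the surface BRDF f_r multiplied with the incoming light intensity L_i and the cosine of the angle of incidence:

$$L_r(x, \omega_o) = L_e(x, \omega_o) + \int_{\Omega} f_r(x, \omega_i, \omega_o)\, L_i(x, \omega_i)\, (\omega_i \cdot n)\, d\omega_i$$

Here x is the surface point, ω_o the outgoing (camera) direction, ω_i an incoming direction, n the surface normal and Ω the hemisphere above the surface.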

So, when you trace an indirect bounce you are sampling (taking an estimate of) the value of L_i for the deeper segments of the path. When you trace many samples per pixel, the variance due to sampling averages out to a smooth image, because the estimate of L_i at each bounce becomes better.
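To make the averaging concrete, here is a minimal CPU-side C++ sketch (not shader code) of a Monte Carlo estimate. It assumes a hypothetical test case, a constant sky of radiance L_sky over the hemisphere, chosen because the exact answer is known (π · L_sky). Each sample evaluates the integrand once and divides by the probability density of the chosen direction; the function name `estimateSkyIrradiance` and its seed parameter are illustrative only.

```cpp
#include <cassert>
#include <random>

constexpr double kPi = 3.14159265358979323846;

// Monte Carlo estimate of the irradiance E = integral over the hemisphere of
// L_sky * cos(theta) d(omega) under a constant sky of radiance L_sky.
// The exact answer is pi * L_sky, so the estimate can be checked.
double estimateSkyIrradiance(double skyRadiance, int sampleCount, unsigned seed) {
    assert(sampleCount > 0);
    std::mt19937 rng(seed);
    std::uniform_real_distribution<double> uniform(0.0, 1.0);
    // Directions are drawn uniformly over the hemisphere: pdf = 1 / (2 * pi).
    const double pdf = 1.0 / (2.0 * kPi);
    double sum = 0.0;
    for (int i = 0; i < sampleCount; ++i) {
        // For a uniformly distributed hemisphere direction, cos(theta) (its z
        // component) is uniform in [0, 1). The azimuth does not affect this integrand.
        const double cosTheta = uniform(rng);
        // One sample of the integrand, divided by the density of choosing it.
        sum += skyRadiance * cosTheta / pdf;
    }
    // Averaging more samples shrinks the variance of the estimate.
    return sum / sampleCount;
}
```

Calling it with a growing sampleCount should show the result settling toward π · skyRadiance, which is the same kind of convergence you see as the number of samples per pixel increases.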

To sample the value of L_i, you must include both direct and indirect light. Direct light is easy: it is the contribution from the light sources in the scene. You can trace a ray from the surface to a randomly chosen point on each light source and, if that ray is not blocked, use the inverse-square law to calculate the intensity received by the surface point. The proper term for this is "next event estimation".
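A minimal sketch of that direct-light step for a single point light, written in C++ for readability. It assumes a Lambertian surface (BRDF = albedo / π), a unit-length normal, and that the shadow-ray result has already been computed and is passed in as `occluded`; `directLightLambert` and its parameters are hypothetical names, not part of any API. The cosine factor comes from the rendering equation; the 1 / d² factor is the inverse-square law.

```cpp
#include <cassert>
#include <cmath>

constexpr double kPi = 3.14159265358979323846;

struct Vec3 { double x, y, z; };

Vec3 sub(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
double dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

// Light from one point light reflected by a Lambertian surface (hypothetical helper).
// `occluded` is the result of the shadow ray traced toward the light.
double directLightLambert(Vec3 hitPoint, Vec3 normal, Vec3 lightPos,
                          double lightIntensity, double albedo, bool occluded) {
    if (occluded) return 0.0;                        // Shadow ray hit something
    Vec3 toLight = sub(lightPos, hitPoint);
    double dist2 = dot(toLight, toLight);
    assert(dist2 > 0.0);
    double dist = std::sqrt(dist2);
    double cosTheta = dot(normal, toLight) / dist;   // normal assumed unit length
    if (cosTheta <= 0.0) return 0.0;                 // Light is behind the surface
    double irradiance = lightIntensity * cosTheta / dist2;  // Inverse-square law
    double brdf = albedo / kPi;                      // Lambertian BRDF
    return brdf * irradiance;
}
```

For a light sampled at a random point on its surface, the result would also be divided by the PDF of that point.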

Indirect light comes from the subsequent bounces of the ray. This process can be described by the following pseudocode. It omits a few details, but has the important parts for calculating the pixel color correctly.

```glsl
float brightness = 1.0f / maxSamples; // Adjust overall exposure
vec3 pixelColor = vec3( 0.0f );
float minDistance = 0.001;
float maxDistance = 1000.0;

// "sample" is a reserved keyword in GLSL, so the loop counter is named sampleIndex
for ( int sampleIndex = 0; sampleIndex < maxSamples; sampleIndex++ )
{
    vec3 rayPoint = samplePixelPoint( RNG ); // Point on near plane within pixel area
    vec3 rayDirection = getPixelDirection( rayPoint );
    vec3 sampleAccumulator = vec3( 0.0f );
    vec3 pathIntensity = vec3( brightness );

    for ( int depth = 0; depth < maxDepth; depth++ )
    {
        // Trace ray, find hit point.
        bool hit, vec3 normal, float distance = traceRay( rayPoint, rayDirection, minDistance, maxDistance );
        if ( !hit )
            break;
        vec3 hitPoint = rayPoint + rayDirection*distance;

        // Gather direct light (next event estimation)
        for ( int l = 0; l < lightCount; l++ )
        {
            vec3 lightPoint, float lightPdf = samplePointOnLight( l, RNG );
            vec3 toLight = lightPoint - hitPoint;
            float lightDistance = length( toLight );
            // Normalize, so that the shadow ray's tmax is a distance in world units
            vec3 lightDirection = toLight / lightDistance;
            bool inShadow = traceRay( hitPoint, lightDirection, minDistance, lightDistance - minDistance );
            if ( inShadow )
                continue;
            sampleAccumulator += pathIntensity *
                brdf.evaluate( rayDirection, lightDirection, normal ) *
                lightIntensity[l] / (lightDistance * lightDistance * lightPdf);
        }

        // Gather indirect light by tracing more bounces
        // Generate random outgoing ray, with probability pdf (important)
        vec3 outDirection, float pdf = brdf.sample( rayDirection, normal, RNG );
        // Apply BRDF to current ray intensity, divide by PDF to get correct result
        pathIntensity *= brdf.evaluate( rayDirection, outDirection, normal ) / pdf;
        rayDirection = outDirection;
        rayPoint = hitPoint;
    }

    // Add sample color to pixel accumulator.
    pixelColor += sampleAccumulator; // No need to divide by maxSamples, it is done in the starting intensity
}
```

To make this work you need a few important BRDF functions. You need a brdf.sample() function which takes in an incoming direction (the ray direction), generates a random outgoing direction distributed according to a PDF matched to the BRDF, and returns that PDF value. You need to know the probability so that you can compensate for it by dividing by the PDF. If you don't do this, your results will be wrong.
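As an illustration of the sample / evaluate / divide-by-PDF contract, here is a hedged C++ sketch of a hypothetical Lambertian BRDF working in the local frame where the normal is (0, 0, 1). It uses cosine-weighted hemisphere sampling, whose PDF is cos(θ) / π. One assumption to flag: the rendering equation's cos(θ) factor has to be applied somewhere, and this sketch applies it explicitly in `bounceWeight` rather than inside `evaluate()`; the pseudocode does not say which convention its brdf.evaluate() uses. The names `Lambertian`, `sample`, `evaluate` and `bounceWeight` are illustrative.

```cpp
#include <cassert>
#include <cmath>
#include <random>

constexpr double kPi = 3.14159265358979323846;

struct Vec3 { double x, y, z; };

// Hypothetical Lambertian BRDF in the local frame (normal = (0, 0, 1)).
struct Lambertian {
    double albedo;  // Material color (one channel)

    // f_r is constant for a Lambertian surface: albedo / pi.
    double evaluate(const Vec3& /*in*/, const Vec3& /*out*/) const { return albedo / kPi; }

    // Cosine-weighted hemisphere sampling; writes pdf = cos(theta) / pi.
    Vec3 sample(std::mt19937& rng, double& pdf) const {
        std::uniform_real_distribution<double> uniform(0.0, 1.0);
        double u1 = uniform(rng);
        double u2 = uniform(rng);
        double r = std::sqrt(u1);                 // Radius on the unit disk
        double phi = 2.0 * kPi * u2;              // Azimuth
        Vec3 d{r * std::cos(phi), r * std::sin(phi), std::sqrt(1.0 - u1)};
        pdf = d.z / kPi;                          // d.z = cos(theta) > 0
        return d;
    }
};

// Factor applied to the path intensity for one bounce: f_r * cos(theta) / pdf.
double bounceWeight(const Lambertian& brdf, const Vec3& in, const Vec3& out, double pdf) {
    assert(pdf > 0.0);
    double cosTheta = out.z;
    return brdf.evaluate(in, out) * cosTheta / pdf;
}
```

For a Lambertian surface sampled this way, f_r · cos(θ) / pdf reduces to the albedo, so every bounce multiplies the path intensity by exactly the material color. Leaving out the division by the PDF would bias the image.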

You also need a brdf.evaluate() function that tells you how much light is transmitted by the BRDF for a known input and output direction. Start with the Lambertian BRDF; it is the simplest. It's also easiest to work in the surface-aligned coordinate system with the normal as the Z axis. So you would generate a 3x3 matrix for the surface reference frame (defined by the tangent, bitangent and normal vectors) and use this matrix to rotate directions into local space, so that the normal direction is always (0,0,1). Then your BRDF math becomes much simpler.
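A sketch of that surface reference frame in C++. It builds the tangent and bitangent from a unit normal with the branchless construction published by Duff et al. (2017, "Building an Orthonormal Basis, Revisited"); any other method that yields an orthonormal frame would work too. The `Frame` type and its method names are hypothetical. `toLocal` applies the 3x3 rotation whose rows are tangent, bitangent and normal; `toWorld` applies its transpose, which is its inverse.

```cpp
#include <cassert>
#include <cmath>

struct Vec3 { double x, y, z; };

Vec3 operator*(Vec3 v, double s) { return {v.x * s, v.y * s, v.z * s}; }
Vec3 operator+(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
double dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

// Orthonormal surface frame: the rows of the world-to-local rotation matrix.
struct Frame {
    Vec3 tangent, bitangent, normal;

    // Branchless tangent/bitangent construction (Duff et al. 2017) from a unit normal.
    explicit Frame(Vec3 n) : normal(n) {
        assert(std::fabs(dot(n, n) - 1.0) < 1e-6);  // Precondition: unit length
        double sign = std::copysign(1.0, n.z);
        double a = -1.0 / (sign + n.z);
        double b = n.x * n.y * a;
        tangent = {1.0 + sign * n.x * n.x * a, sign * b, -sign * n.x};
        bitangent = {b, sign + n.y * n.y * a, -n.y};
    }

    // World -> local: the normal maps to (0, 0, 1).
    Vec3 toLocal(Vec3 v) const { return {dot(v, tangent), dot(v, bitangent), dot(v, normal)}; }

    // Local -> world: transpose (inverse) of the rotation used by toLocal.
    Vec3 toWorld(Vec3 v) const { return tangent * v.x + bitangent * v.y + normal * v.z; }
};
```

A direction sampled in local space (z up) is mapped back with toWorld() before tracing the next ray.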

The material color is technically included as part of the BRDF, though you could multiply it outside the call to brdf.evaluate() if that is easier for the code.

You are a god. Thank you so much. I will study your response over the next few days. I look forward to studying the code, in particular.

OK, one big problem: my code does not specify a light source, not even once. So, even though I have caustics working, I did not have to do backward or bidirectional path tracing. Is this a new thing?

Path tracers don't need to know whether there are light sources in the scene. A path tracer is a global illumination renderer, which means it figures out the illumination in the scene on its own. When you make a material emissive (channel color values higher than 1), it starts acting as a light for the scene. This is also why you don't need to spawn secondary rays at each bounce to figure out whether something is in shadow or in view of the light.
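A stripped-down C++ sketch of why no light list is needed: emission is added whenever a path hits an emissive material, weighted by the throughput gathered so far, and the throughput is multiplied at each bounce. Scene intersection is replaced by a hypothetical uniform scene in which every hit returns the same `Surface`, and a Lambertian BRDF with cosine-weighted sampling is assumed, so each bounce scales the throughput by exactly the albedo. With emission L_e and albedo a, the result is the geometric series L_e · (1 + a + a² + ...), truncated after maxDepth hits, which approaches L_e / (1 − a). The names `Surface` and `tracePath` are illustrative.

```cpp
#include <cassert>

// Hypothetical uniform scene: every ray hits a surface with these properties.
struct Surface {
    double emission;  // Radiance emitted by the material (0 for non-emissive)
    double albedo;    // Lambertian reflectance in [0, 1)
};

// Radiance along one camera path with no explicit light list.
double tracePath(const Surface& s, int maxDepth, double startThroughput) {
    assert(maxDepth >= 0);
    double radiance = 0.0;
    double throughput = startThroughput;      // "Starting energy" of the ray
    for (int depth = 0; depth < maxDepth; ++depth) {
        radiance += throughput * s.emission;  // Add light emitted at this hit
        throughput *= s.albedo;               // f_r * cos / pdf for this bounce
    }
    return radiance;
}
```

Halving `startThroughput` halves the result, which is the "starting energy" knob mentioned earlier in the thread, and a scene with no emissive material stays black.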